Prelude to Collapse: A World Already Burning

Green Eye Fever is a short film about control, collapse, and authorship in the age of the machine. Told through the lens of four broadcast networks unraveling in real time, it documents the moment civilization loses its signal… when truth dissolves into noise and even the news begins to pray.

The story unfolds like recovered footage: each anchor reporting with integrity as the world’s sanity decays, each frame infected by the very organism they describe. Through layers of AI-generated imagery, hand-crafted motion design, and editorial performance, the film blurs where reality ends and transmission begins.

Created as both a proof of concept and narrative prologue, Green Eye Fever establishes the world that my next story inhabits, a post-collapse society haunted by the echoes of these broadcasts, where survivors live beneath the mythology of what the cameras captured before the lights went out.

Design Manifesto: The Birth of the Fever

At its core, Green Eye Fever is a film about communication as contagion. I wanted to explore what happens when information itself becomes infected, when the tools we use to document reality begin rewriting the story.

Stylistically, the short fuses AI generation, motion graphics, and cinematic editing into one evolving language. The early scenes imitate the order of 1990s broadcast design: calm, balanced, trustworthy. As the story progresses, that structure erodes: typography breaks, voices distort, and the visuals devolve into feverish hallucination.

The process mirrored the story. Every element, from AI prompts to compositing choices, was an act of containment and surrender. I treated the edit like an experiment in authorship: what happens when the editor becomes the director, creating the dream while being consumed by it?

In the end, Green Eye Fever isn’t about the infection we see; it’s about the one we hear. The slow collapse of order. The seduction of distortion. The beauty of losing control and realizing that creation itself might be the contagion.

Behind the Scenes: Making “Green Eye Fever”

Concept Art & Character Design

Survivors

The first stage of design focused on the survivors, ordinary people pushed to extremes. Each concept began as a shape-driven Procreate sketch, built around silhouette, rhythm, and posture rather than detail. Once the visual language was established, I reinterpreted the figures in Photoshop, testing realism, texture, and emotional tone. The result was a study in grit and willpower, where every fold of fabric and shadow of fatigue told the story of endurance.

Infected

“The infected were designed as evolving forms, their flesh and identity bending under the weight of transformation. Early iterations focused on clarity of silhouette for potential animation, ensuring movement and form could read instantly. Later passes leaned into realism, exploring how infection distorts anatomy while leaving behind a faint, haunting trace of who they once were. Each sketch became a visual metaphor: creativity itself as a beautiful contagion.”

Artificial Genesis: Conjuring Worlds from Code

Once the hand-drawn visual language was set, I used AI tools as an extension of that craft, not a shortcut. Midjourney acted as a concept camera, helping expand the world’s scale, tone, and atmosphere.

Each generation began with intent, guided by the original sketches in color, light, and form. The goal wasn’t randomness but controlled discovery: finding surprise without losing authorship.

These explorations, from anchors to unseen antagonists and survivors, became raw material for motion, compositing, and world-building. Every image was painted over, edited, and reworked to align with the hand-crafted world.

ANN (Associated National News): Rodger Carlton

TST (Top Story Tonight): Sarah Wong

EEN (Eagle Eye News): Elroy Masterson

WHN (World Headline Network): Alexis Alexander

Elroy Masterson (EEN) “REDESIGN”

Peter Lovecraft (Whistleblower)

Elijah Dyer

Machine Rituals: Constructing the Unreal

Many of Midjourney’s generated props, signs, headlines, and documents arrived distorted or unreadable. Each element was meticulously rebuilt in Photoshop, replacing warped text and restoring legibility while preserving the AI’s raw texture.

The goal was to keep the surreal aesthetic intact while giving every frame narrative clarity.

Original Renders from Midjourney

Photoshop Clean Up and Typography Replacement

Broadcast Faith: Voices of a Doomed World

Each network served as both a design system and a character, a distinct voice within the film’s collapsing world. From anchor styling to lower-thirds and logos, every detail sustained the illusion of real broadcast media documenting the outbreak in real time.

Original Character Design From Midjourney

Updated Character Replaced in Runway AI

The original Elroy Masterson felt awkward in motion: his body language didn’t match the authority and weight that his voice conveyed in the final edit. I reworked the character through Midjourney, refining his posture, proportions, and wardrobe to better reflect his dominance on screen.

Using Runway AI’s character replacement system, I seamlessly integrated the new version into the broadcast composite, preserving continuity while elevating the performance’s cinematic weight.

Cinematic Systems: Building Reality from Fragments

“Each AI platform became a department in a one-person studio.”

Finding control in a medium built on limitation became part of the art itself. If the footage felt too mechanical, the illusion broke. If censorship stripped all sense of danger, the story lost its pulse. Every tool demanded balance between imperfection and intention.

Midjourney set the tone, unmatched in light, texture, and atmosphere, but its motion tools were limited by their inability to create character animation.

Pika became the motion lab. Its camera tools made testing easy, but results often broke down: morphing artifacts, blur, and unstable imports. Still, it helped shape the film’s rhythm and emotional pacing.

Runway became the backbone. Its Gen-4 tools let me merge acting, camera control, and voice in one system. I physically performed each scene and drove digital characters using custom ElevenLabs voices tuned for tone and emotion.

In short, Midjourney built the world, Pika explored its motion, and Runway brought it to life, a workflow that blurred the line between direction, performance, and authorship.

From Prompt to Performance: Evolving AI Acting

Demonstrating the transition from text-driven speech to true, expressive performance.

Rendering Reality: Visual Fidelity Across Platforms

A visual comparison highlighting how each system interprets motion, depth, and stability under identical conditions.

| Platform | Primary Strength | Limitation | Ideal Use Case |
|---|---|---|---|
| Midjourney | Exceptional visual fidelity and cinematic lighting. | No real acting or lip-sync capability. | Concept art, animated stills, backgrounds. |
| Pika | Good for camera motion and quick tests. | Image distortion and blur with imported artwork. | Motion studies and prototype sequences. |
| Runway | Best for performance and voice sync integration. | Limited camera control options. | Acting-driven scenes and final animation. |

Signal Interference: The Gospel of Censorship

Early in production, I encountered the strict content moderation systems built into most generative AI tools. Because Green Eye Fever explores infection, chaos, and decay through a cinematic horror lens, many prompts triggered automated safety filters.

Rather than describing scenes literally, I had to develop indirect, coded language that suggested violence without stating it. Terms like “red liquid,” “medical emergency,” or “uncontained incident” often produced better, policy-safe results than direct wording.

These limitations ultimately forced more creativity in prompt design and composition. I leaned heavily on cinematic lighting, atmosphere, and expression to imply danger while staying compliant with community guidelines.

Below are examples of the system rejections that shaped this part of the workflow.

Images created in Midjourney

“The censorship systems became part of the creative process. They pushed me toward smarter visual storytelling, where implication replaced shock.”

Synthetic Cinema: Machines That Dream in Motion

Each of these short clips shows how Pika handled image-to-video generation using the same source prompts tested in Runway and Midjourney.

While Pika’s footage was often distorted or “morphed,” I used editing, motion graphics, and color grading to hide most of these flaws in the final film, turning unstable outputs into something cinematic.

Observations:

Pika offered the most freedom but required the most cleanup and iteration.

Runway provided cleaner results but enforced stricter moderation.

Midjourney was strongest for tone and composition but lacked animation flexibility.

Original Midjourney Render

Runway censorship

Pika Motion Render

Original Midjourney Render

Runway censorship

Pika Motion Render

While Runway delivered the highest-quality motion on a consistent basis, Pika offered usable footage that otherwise wouldn’t have been made at all.

Encoded Motion: From Broadcast to Contagion

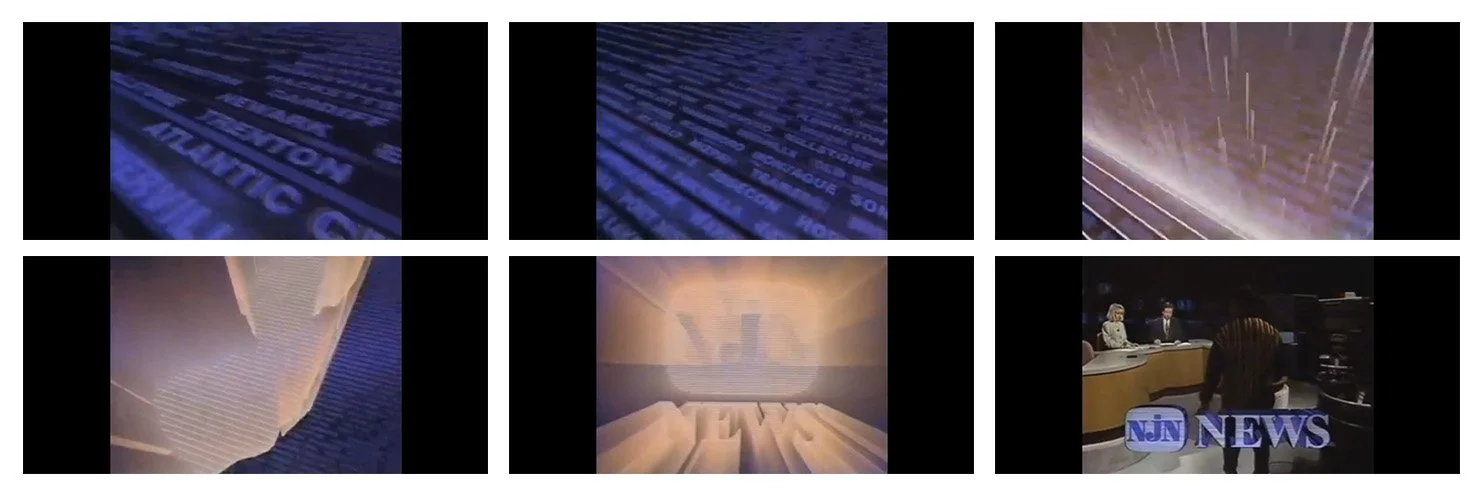

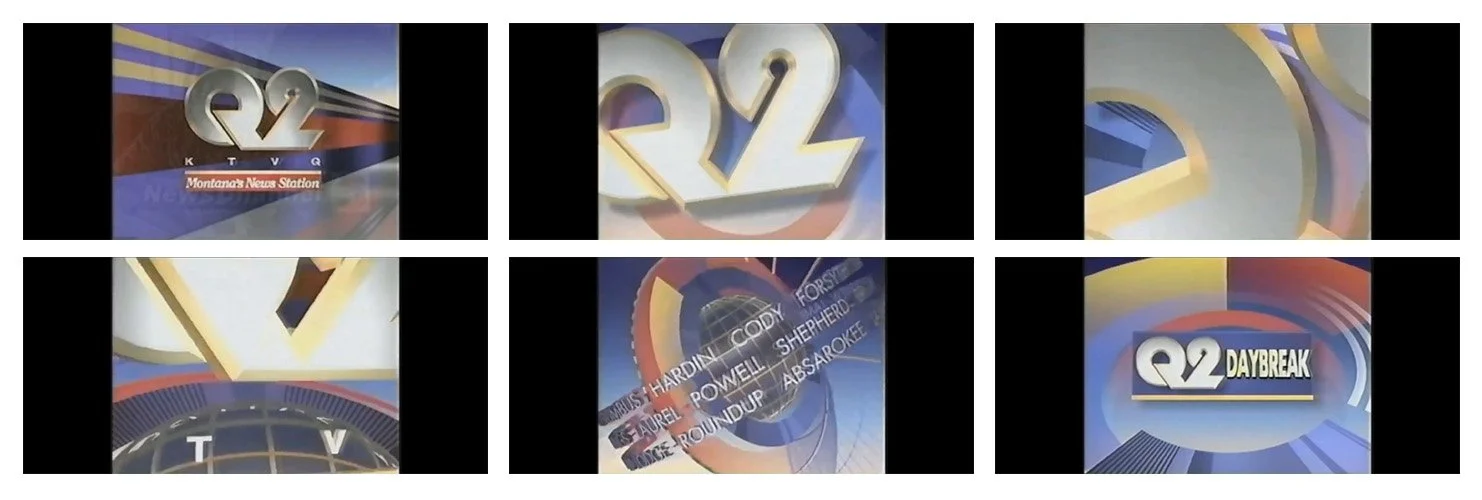

Broadcast Motion References

These sequences reinterpret the design language of late-20th-century news graphics, a time when trust was engineered through rotation, light, and sound. The WHN and ANN idents were crafted as relics of stability: crisp motion, clean gradients, and proud typography masking the quiet onset of decay. Their rhythm feels familiar, but underneath the composure, the infection is already in the sign.

NEW JERSEY: MONTCLAIR - WNJN (PBS) - 1993

NEVADA: LAS VEGAS - KVVU (FOX) - 1998

MONTANA: BILLINGS - KTVQ (CBS) - 1992

ANN Breaking News Bumper (2.5s)

Accelerated transitions, hard wipes, and sonic urgency foreshadow chaos beneath order, mirroring the real-world pacing of breaking news.

WHN Intro (15s)

Establishes network authority through slow rotation, 3D logo extrusion, and confident pacing, mirroring the hypnotic effect that real-world news programs have on viewers.

Broadcast Motion Design: Order Before the Collapse

Each network was built as a distinct visual and ideological voice within the film’s world. From anchor styling to lower-thirds and logo systems, every design choice reinforced the illusion of authentic broadcast media struggling to report on the “Green Eye Fever” outbreak.

Sigils & Seals: The Infection Beneath the Signal

The sigils began as digital rituals, geometric designs that pulse and breathe like living code. They symbolize the Organism’s reach: an unseen force rewriting language, rhythm, and flesh.

Sigil Alphabet designed in Procreate

These looping forms were animated to mimic psychic interference patterns, visuals that feel both sacred and diseased. As the signal distorts and the news feed dies, something else begins broadcasting its own sigil.

Angelic Seals designed in Photoshop

Here, the imagery blurs truth and madness, suggesting the infection has reached the viewer’s mind, rewriting their nervous system as they, too, begin to change.

Looping sigil animation created through time remapping and layered stacking to build rhythm and visual tension.

Displacement maps and analog distortion applied to introduce digital decay, textures that corrode the signal until it feels infected.

Ritual of Decay: The Breakdown of Reality

The final stage of Green Eye Fever was about degradation, not perfection. I wanted the film to feel recovered, like corrupted footage that somehow survived the collapse. Each distortion pass became a metaphor for infection, breaking digital clarity into something organic and unstable.

Original Midjourney Render

Edge and wave distortion

Chromatic Aberration

Interlacing

Color Correction

Each layer is its own symptom: texture as decay, color as contamination, noise as heartbeat.
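For readers who want to see what two of these passes do at the pixel level, here is a minimal Python sketch. It is not the film's actual effect stack, which was built with compositing tools. The function names, the one-pixel shift, and the 50% scanline dimming are all illustrative assumptions, and the "interlace" here is only a scanline-darkening approximation of the interlaced look, not true field interlacing.

```python
def chromatic_aberration(image, shift=1):
    """Slide the red channel right and the blue channel left by `shift` pixels.

    `image` is a list of rows; each pixel is an (r, g, b) tuple.
    Samples that fall outside the frame are clamped to the nearest edge pixel.
    """
    width = len(image[0])
    out = []
    for row in image:
        new_row = []
        for x in range(width):
            red_src = min(max(x - shift, 0), width - 1)   # red comes from the left
            blue_src = min(max(x + shift, 0), width - 1)  # blue comes from the right
            new_row.append((row[red_src][0], row[x][1], row[blue_src][2]))
        out.append(new_row)
    return out


def interlace(image, darken=0.5):
    """Dim every odd scanline (hypothetical stand-in for an interlaced look)."""
    return [
        [tuple(int(c * darken) for c in px) for px in row] if y % 2 else list(row)
        for y, row in enumerate(image)
    ]


# A tiny test frame: one white vertical stripe on black, two rows tall.
frame = [[(0, 0, 0), (255, 255, 255), (0, 0, 0)] for _ in range(2)]
fringed = chromatic_aberration(frame, shift=1)
degraded = interlace(fringed)
```

On the test frame, the white stripe splits into separate blue, green, and red columns, which is the color fringing the chromatic aberration pass adds around edges.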

Built in After Effects, the static and VHS bleed were handcrafted using fractal noise, warp displacement, and analog blur. The goal wasn’t realism; it was resonance — to make digital corruption feel tactile, human, and haunted.
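The idea behind fractal noise can be sketched in a few lines of Python: several layers ("octaves") of smooth random noise are stacked, each one finer and fainter than the last. This is a generic value-noise illustration, not After Effects' own algorithm. The function names, octave count, grid sizes, and seed are assumptions chosen for the example.

```python
import random


def value_noise(width, height, cells, rng):
    """Smooth noise: random values on a coarse grid, bilinearly interpolated."""
    lattice = [[rng.random() for _ in range(cells + 1)] for _ in range(cells + 1)]
    out = []
    for y in range(height):
        gy = y / height * cells
        y0 = int(gy)
        ty = gy - y0
        row = []
        for x in range(width):
            gx = x / width * cells
            x0 = int(gx)
            tx = gx - x0
            top = lattice[y0][x0] * (1 - tx) + lattice[y0][x0 + 1] * tx
            bottom = lattice[y0 + 1][x0] * (1 - tx) + lattice[y0 + 1][x0 + 1] * tx
            row.append(top * (1 - ty) + bottom * ty)
        out.append(row)
    return out


def fractal_noise(width, height, octaves=4, seed=0):
    """Sum octaves of value noise, halving amplitude and doubling detail each time."""
    rng = random.Random(seed)
    total = [[0.0] * width for _ in range(height)]
    amplitude, cells, norm = 1.0, 2, 0.0
    for _ in range(octaves):
        layer = value_noise(width, height, cells, rng)
        for y in range(height):
            for x in range(width):
                total[y][x] += layer[y][x] * amplitude
        norm += amplitude
        amplitude *= 0.5
        cells *= 2
    # Divide by the summed amplitudes so values stay in roughly the 0..1 range.
    return [[v / norm for v in row] for row in total]


static = fractal_noise(64, 48, octaves=4, seed=7)
```

Changing the seed per frame would make the pattern flicker like static, while a fixed seed gives a stable texture that can be warped or displaced.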

Procedural Static (Fractal Noise)

Procedural Noise 1

Procedural Noise 2

Static & Distortion Effects: The Collapse of Perception

By the film’s final act, the broadcast language has disintegrated. Color drifts, voices whisper beneath static, and the once-stable camera fractures into abstraction. Each flicker is intentional, the viewer’s mind unspooling, the Organism speaking through distortion. These effects weren’t random; they were scored like music. Fractal noise, warping, and analog degradation built a rhythm of decay that turned the tools of broadcast clarity into instruments of psychological infection.

Final renders were processed to feel analog, imperfect and unstable. Noise overlays, desaturated highlights, and subtle chroma drift made the footage feel recovered from another era.

The goal wasn’t nostalgia, it was truth through imperfection, as if the machine itself remembered what it saw.

Soundtrack of the Apocalypse

The music became the heartbeat of Green Eye Fever.

Each bass hit wasn’t just rhythm, it was the cut itself. Every sharp snare became a flash, a fracture, a pulse of violence implied rather than seen. The heavy beat allowed me to sustain a fast, cinematic rhythm even when the story’s world restricted direct action.

With censorship limiting gore or explicit violence, the score carried the aggression. The percussion became a weapon — driving montage and tension forward while keeping the pacing relentless. Each visual transition hits on the downbeat, each snare crash pushes the frame into chaos.

I built the edit around the rhythm, cutting on every bass impact and flashing frames on the snare hits. This created the illusion of physical contact, a psychological echo of combat, panic, and decay, all without showing a single act of violence.
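The arithmetic behind cutting to the beat is simple enough to sketch. The Python below converts a tempo into frame numbers for cuts and flashes. The 120 BPM tempo, the 24 fps frame rate, and the pattern of bass on beats 1 and 3 with snare on beats 2 and 4 are illustrative assumptions, not values taken from the film's score.

```python
def beat_frames(bpm, fps, duration_s, beats_per_bar=4):
    """Return (bass_frames, snare_frames) for a simple repeating bar.

    Assumption: bass lands on beats 1 and 3 of each bar, snare on beats 2 and 4.
    """
    seconds_per_beat = 60.0 / bpm
    bass, snare = [], []
    beat = 0
    while beat * seconds_per_beat < duration_s:
        frame = round(beat * seconds_per_beat * fps)  # nearest whole frame
        if beat % beats_per_bar in (0, 2):
            bass.append(frame)    # candidate cut points
        else:
            snare.append(frame)   # candidate flash frames
        beat += 1
    return bass, snare


bass_cuts, snare_flashes = beat_frames(bpm=120, fps=24, duration_s=4)
print("cut on frames:", bass_cuts)
print("flash on frames:", snare_flashes)
```

At 120 BPM and 24 fps each beat lasts exactly 12 frames, so the markers fall on clean frame boundaries. Other tempos land between frames, which is why the sketch rounds to the nearest frame.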

The music didn’t accompany the film; it authored it.

Soundtrack Breakdown: Editing to the Pulse

Audio timeline edit for the score to “Green Eye Fever”

The rhythm became my violence.

Every cut, flash, and camera move was dictated by the beat, each bass hit striking like a pulse of impact the audience could feel even when they couldn’t see it. When the visuals couldn’t show blood or physical destruction, the music carried the aggression for me. The percussion drove the edits, turning motion into emotion; snare hits became flash cuts, bass drops became visual blows.

Across Inferno, Underworld, and World News, the tempo evolved from heartbeat to panic, a sonic architecture that mirrored the collapse onscreen. The music didn’t sit beneath the film; it animated it, pushing tension forward with mechanical precision and human instinct. Every sequence was built around that pulse, sound first, image second, until the rhythm itself became the story.

Track 1: “INFERNO”

Used for: Opening tone tests | Role: Main theme, early score

A pulse of dread beneath the calm. “Inferno” defined the visual rhythm, its steady bass and cinematic build guided the story’s first edit passes. The track became the DNA of the film’s tempo.

Track 2: “UNDERWORLD”

Used for: Extended main theme | Role: Primary action sequence score

An expansion of “Inferno,” written to sustain tension over a longer runtime. This track anchors the film’s most kinetic sections, driving montage, conflict, and rising chaos with a relentless, percussive beat.

Track 3: “UNDERWORLD 1”

Used for: Transitional sequences | Role: Bridge between tension and collapse

A variant remix that reshapes “Inferno’s” pulse into something slower, heavier, and more decayed. It gives the audience breathing room, the illusion of calm before the next surge.

Track 4: “WORLD NEWS”

Used for: Broadcast intro / tonal shift | Role: Inciting event

A false sense of order, upbeat, polished, professional, until the music itself begins to panic. The energy peak at 1:39 drives the reveal of the WHN logo and cuts clean into the first mention of the “green ooze,” turning corporate news into ritual panic.

Track 5: “BITE OF THE FALLEN SWORD”

Used for: End credits sequence | Role: Final transmission

The last breath before the signal dies. “Bite of the Fallen Sword” closes the film with an eerie calm, the sound of faith collapsing, yet still pretending to report the truth. It ends on a single cut, a TV shutting off into silence.

Soundtrack of the Apocalypse: The Voices Beneath the Static

Each broadcast voice in Green Eye Fever was generated with ElevenLabs, crafted to sound calm, authoritative, and just a little too perfect.

I tuned tone, timing, and inflection until they felt almost human, convincing enough to trust, mechanical enough to unsettle.

Their composure became part of the horror: even as the world disintegrates, the anchors keep speaking, unblinking.

Paired with Suno’s pulse-driven score, these voices turned information into hypnosis, a chorus of calm narrating the end of reason.

When the voices finally aligned with the faces, the illusion was complete, and that’s when people stopped questioning what was real.

Echoes from the Abyss

Viewers described the film as:

“Like the intro to a video game I’d immediately want to play.”

“It feels like you’re inside someone else’s dream.”

“I could tell it was you through how the characters move; it’s got your rhythm.”

Some audiences instantly recognized my creative fingerprint; others had no idea it was a one-person production. Both reactions prove the point: when done right, the human touch doesn’t vanish through AI. Instead it becomes invisible, embedded in rhythm, motion, and tone.

The Last Transmission

Green Eye Fever is a film about control, losing it, reclaiming it, and realizing both acts are part of the same creation. It captures the paradox of modern authorship: the artist guiding machines that, in turn, begin to guide the artist.

In an era where tools evolve faster than the rules that define them, Green Eye Fever stands as both warning and proof of concept. It shows that creativity hasn’t died in the machine age; it has mutated, learned to dream, and started dreaming back.